Understanding Artificial Intelligence and Neural Networks: A Comprehensive Guide

June 14, 2024 | by zolindar@gmail.com

Photo by Mahdis Mousavi on Unsplash

Introduction to Artificial Intelligence

Artificial Intelligence (AI) refers to the simulation of human intelligence in machines, enabling them to perform tasks such as learning, reasoning, and perception. This technology has become a pivotal part of modern society, influencing sectors from healthcare to entertainment. The concept of AI dates back to the 1950s, when John McCarthy coined the term. Over the decades, AI has evolved significantly, marked by key milestones such as the development of the first neural networks, the commercial rise of expert systems in the 1980s, and the spread of machine learning and, especially since the early 2010s, deep learning.

AI is commonly divided into three types: narrow AI, general AI, and superintelligent AI. Narrow AI, also known as weak AI, is designed to perform a specific task, such as facial recognition or internet search; virtually all AI systems deployed today are narrow. General AI, or strong AI, would understand, learn, and apply knowledge across a wide range of tasks with abilities comparable to human cognition, but it remains a research goal. Superintelligent AI, a purely theoretical form, would surpass human intelligence in virtually every respect, from creativity to problem-solving.

Despite its growing prevalence, AI is often misunderstood. A common misconception is that AI systems possess human-like consciousness or emotions. In reality, current AI operates based on algorithms and data, without true understanding or awareness. Ethical considerations are another critical aspect of AI development. Issues such as data privacy, bias in algorithmic decision-making, and the potential for job displacement necessitate ongoing dialogue and regulation.

The impact of AI on industries and daily life is profound. In healthcare, AI assists in diagnosing diseases and personalizing treatments. In finance, it aids in fraud detection and algorithmic trading. AI-powered automation is transforming manufacturing, while in everyday life, virtual assistants like Siri and Alexa have become ubiquitous. As AI continues to advance, its potential to drive innovation and address complex challenges grows, making it an indispensable component of the future technological landscape.

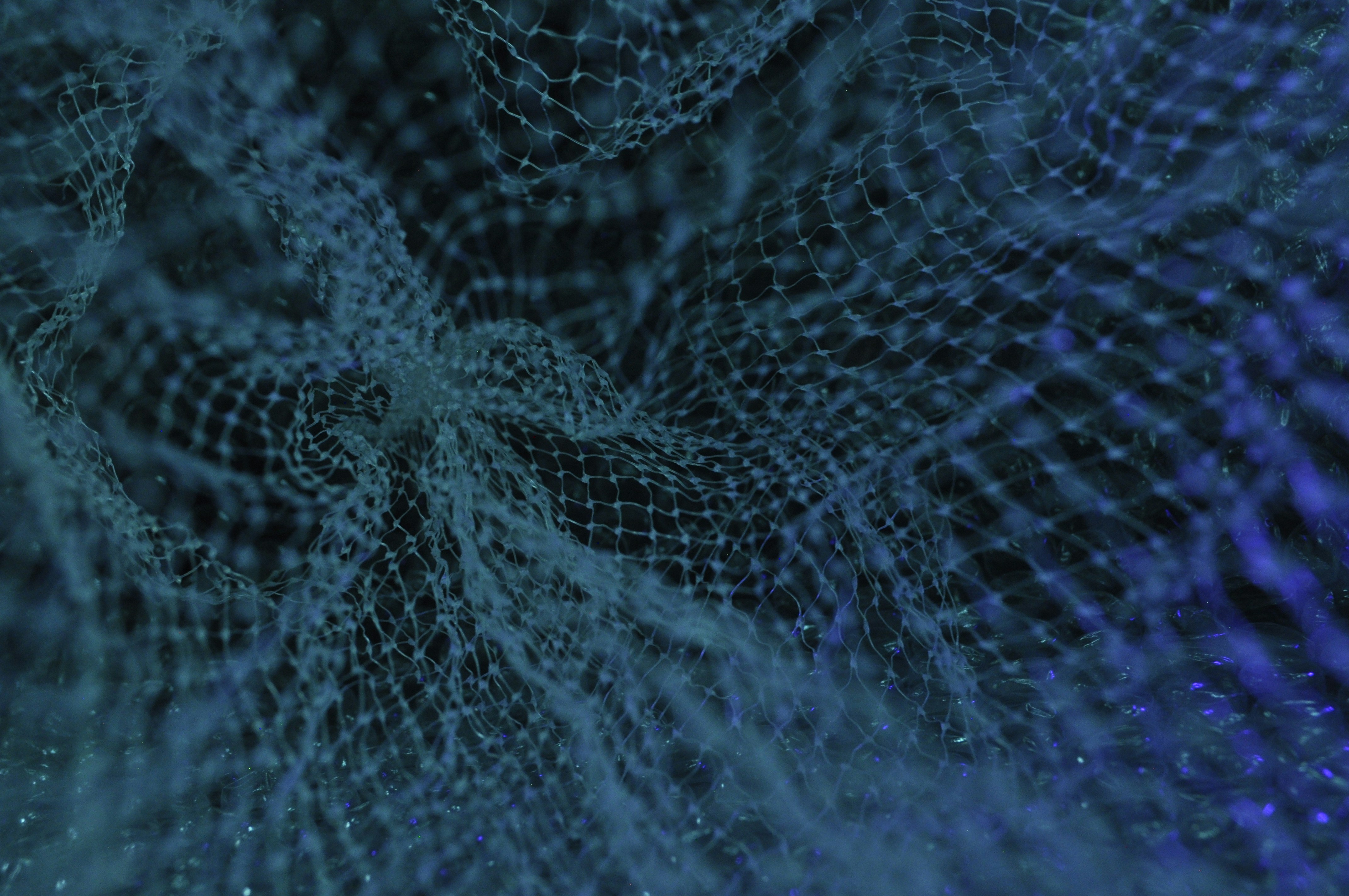

Exploring Neural Networks

Neural networks are a pivotal component of artificial intelligence, loosely inspired by the structure and function of the human brain. They consist of interconnected nodes, or “neurons,” organized in layers: data enters through an input layer, is transformed by one or more hidden layers, and emerges as a result at the output layer. Each connection carries a weight, and by adjusting these weights during training, neural networks learn from data, identify patterns, and make decisions.

Neural networks can be categorized into various types, each with unique structures and applications. Feedforward neural networks are the simplest form, where data moves in one direction, from input to output. These networks are typically used in applications such as pattern recognition and simple classification tasks.
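To illustrate the one-way flow, here is a minimal sketch of a forward pass through a small fully connected feedforward network in plain Python. The 2-3-1 layer sizes, the hand-picked weights, and the helper names `dense_layer` and `feedforward` are hypothetical; a real network would learn its weights from data and would typically be built with a library such as NumPy or PyTorch.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(z: float) -> float:
    """Rectified Linear Unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)


def dense_layer(inputs: Vector, weights: Matrix, biases: Vector, activate: bool = True) -> Vector:
    """Compute one fully connected layer.

    Row i of `weights` holds the incoming weights of output neuron i.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(relu(z) if activate else z)
    return outputs


def feedforward(inputs: Vector, layers: List[tuple]) -> Vector:
    """Pass data through each layer in order; nothing flows backward."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        is_last = i == len(layers) - 1
        # Hidden layers use ReLU; the output layer is left linear in this sketch.
        activations = dense_layer(activations, weights, biases, activate=not is_last)
    return activations


# Hypothetical 2-3-1 network with hand-picked (not learned) weights.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # input -> hidden
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # hidden -> output
]

print(feedforward([1.0, 2.0], network))
```

Each layer's output becomes the next layer's input, and no value ever returns to an earlier layer, which is what separates a feedforward network from a recurrent one.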

Convolutional neural networks (CNNs) are specifically designed for processing structured grid data, such as images. CNNs use convolutional layers that apply filters to the input data, capturing spatial hierarchies in images. This makes them highly effective for image recognition tasks, such as identifying objects within photographs or facial recognition systems.
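To make “applying a filter” concrete, the sketch below slides a small kernel over a 2D grid and computes a weighted sum at each position: a “valid” convolution with stride 1 and no padding. Like most deep-learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation. The toy image and the vertical-edge kernel are made-up values; in a CNN, kernel values are learned during training.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D convolution (cross-correlation, no kernel flip), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x6 "image": dark left half (0), bright right half (1).
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hypothetical 3x3 vertical-edge detector.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in conv2d(image, kernel):
    print(row)  # each row: [0.0, 3.0, 3.0, 0.0]
```

The output is non-zero only where the kernel straddles the dark-to-bright boundary, which is the sense in which a filter “detects” a feature; deeper layers combine such simple responses into more complex ones.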

Recurrent neural networks (RNNs) have loops in their architecture, allowing information to persist. This characteristic makes RNNs suitable for sequential data tasks, such as language modeling, speech recognition, and time-series prediction. By maintaining a ‘memory’ of previous inputs, RNNs can make informed predictions based on historical data.
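The “memory” of an RNN is its hidden state, which is fed back in at every time step. Here is a minimal sketch of a simple (Elman-style) recurrent cell with a scalar input and a scalar hidden state, using the update h_t = tanh(w_x · x_t + w_h · h_{t−1} + b). The weight values and the function name `rnn_forward` are assumptions for illustration; practical RNNs use vector states, learned weights, and often gated variants such as LSTMs or GRUs.

```python
import math
from typing import List


def rnn_forward(inputs: List[float], w_x: float, w_h: float, b: float, h0: float = 0.0) -> List[float]:
    """Run a scalar Elman-style RNN over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights chosen for illustration only.
W_X, W_H, B = 1.0, 0.5, 0.0

# Both sequences end with the same input (0.0) but have different histories.
print(rnn_forward([1.0, 0.0], W_X, W_H, B)[-1])
print(rnn_forward([-1.0, 0.0], W_X, W_H, B)[-1])
```

Although both sequences end with the same input, the final hidden states differ (one positive, one negative) because each state still carries information about the earlier input.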

Several key concepts underlie how neural networks compute and learn. Each neuron computes a weighted sum of its inputs plus a bias term, applies an activation function to that sum, and passes the result to the next layer. Common activation functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh. Because these functions are nonlinear, stacking layers of neurons lets the network model complex relationships that a purely linear model could not capture.
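The sketch below computes a single neuron's output, first the weighted sum z = w · x + b and then an activation, using each of the three functions named above. The inputs, weights, and bias are made-up values, and `neuron` is a hypothetical helper name. The sigmoid is written in a numerically stable form so that very negative inputs do not overflow `math.exp`.

```python
import math
from typing import Callable, List


def relu(z: float) -> float:
    # Keeps positive values, maps negatives to 0.
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Squashes any real number into (0, 1); branch avoids overflow in math.exp.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z: float) -> float:
    # Squashes any real number into (-1, 1).
    return math.tanh(z)


def neuron(inputs: List[float], weights: List[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """Weighted sum of inputs plus bias, passed through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


x = [2.0, -1.0]
w = [0.5, 1.5]
b = 0.25  # z = 0.5*2.0 + 1.5*(-1.0) + 0.25 = -0.25

for fn in (relu, sigmoid, tanh):
    print(fn.__name__, neuron(x, w, b, fn))
```

With the same negative pre-activation, ReLU outputs exactly zero, Sigmoid outputs a value just below 0.5, and Tanh outputs a small negative value, which shows how the choice of activation shapes what a neuron passes on.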

Backpropagation is the fundamental algorithm used to train neural networks. After the network makes a prediction, a loss function measures the error at the output; backpropagation then applies the chain rule to propagate this error backward through the network, computing how much each connection weight contributed to it (the gradient of the loss with respect to that weight). An optimization algorithm such as gradient descent then uses these gradients to adjust the weights in the direction that reduces the error, gradually improving the network's accuracy.
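The division of labor between backpropagation and gradient descent can be seen in a tiny network with one input, one tanh hidden unit, and one linear output, trained on a single example with squared-error loss L = ½(ŷ − y)². The backward pass applies the chain rule from the loss back to each parameter, and the update step subtracts a small multiple of each gradient (plain gradient descent). The training example, starting weights, learning rate, and function names are arbitrary assumptions for this sketch.

```python
import math
from typing import Dict, Tuple

Params = Dict[str, float]


def forward(params: Params, x: float) -> Tuple[float, float]:
    """Return (hidden activation, prediction) for a 1-1-1 network."""
    h = math.tanh(params["w1"] * x + params["b1"])
    y_hat = params["w2"] * h + params["b2"]
    return h, y_hat


def loss(params: Params, x: float, y: float) -> float:
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params: Params, x: float, y: float) -> Params:
    """Gradients of the loss w.r.t. each parameter, via the chain rule."""
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y               # dL/dy_hat for L = 0.5 * (y_hat - y)^2
    d_w2 = d_yhat * h                # output-layer gradients
    d_b2 = d_yhat
    d_h = d_yhat * params["w2"]      # error propagated back to the hidden unit
    d_z1 = d_h * (1.0 - h ** 2)      # tanh'(z) = 1 - tanh(z)^2
    d_w1 = d_z1 * x                  # hidden-layer gradients
    d_b1 = d_z1
    return {"w1": d_w1, "b1": d_b1, "w2": d_w2, "b2": d_b2}


def gradient_descent_step(params: Params, grads: Params, lr: float) -> Params:
    """Move each parameter a small step against its gradient."""
    return {name: value - lr * grads[name] for name, value in params.items()}


# Hypothetical starting weights, training example, and learning rate.
params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}
x, y = 1.0, 0.8
lr = 0.1

for step in range(51):
    if step % 10 == 0:
        print(f"step {step:2d}  loss {loss(params, x, y):.6f}")
    params = gradient_descent_step(params, backprop(params, x, y), lr)
```

The printed loss shrinks as training proceeds. In practice, libraries compute these gradients automatically, and a common sanity check is to compare them against finite-difference estimates of the loss.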

Neural networks have numerous real-world applications. In image recognition, CNNs power systems that classify images and detect objects, with major uses in fields such as medical imaging and autonomous driving. In speech recognition, RNNs have been widely used to help virtual assistants such as Siri and Alexa understand and respond to spoken language. These examples underscore the transformative impact of neural networks across various industries.
