Understanding Artificial Intelligence and Neural Networks

June 14, 2024 | by zolindar@gmail.com

Introduction to Artificial Intelligence

Artificial Intelligence (AI) represents one of the most transformative technologies of the 21st century. Defined as the simulation of human intelligence processes by machines, particularly computer systems, AI encompasses a range of techniques and approaches. The concept of AI dates back to the mid-20th century: the term was coined by John McCarthy for the 1956 Dartmouth workshop. However, its roots can be traced further back, to ancient myths of automata and to the work of visionaries such as Alan Turing, whose 1950 paper "Computing Machinery and Intelligence" asked whether machines can think.

AI can be broadly categorized into two types: narrow AI and general AI. Narrow AI, also known as weak AI, refers to systems designed to perform a specific task, such as facial recognition or language translation. These systems are highly effective within their defined boundaries but cannot perform tasks outside their domain. General AI, or strong AI, would be a more advanced form of AI able to understand, learn, and apply knowledge across a wide range of tasks, much as humans do. While narrow AI is prevalent today, general AI remains a theoretical concept and a subject of ongoing research.

The applications of AI are vast and varied, impacting numerous industries. In healthcare, AI assists in diagnosing diseases, predicting patient outcomes, and personalizing treatment plans. The finance industry leverages AI for fraud detection, risk management, and automated trading. In transportation, AI powers autonomous vehicles, optimizes traffic management, and enhances logistics efficiency. These examples merely scratch the surface of AI’s potential, as its capabilities continue to grow and evolve.

While AI offers significant benefits, it also raises ethical considerations and potential societal impacts. Issues such as data privacy, job displacement, bias in AI algorithms, and the need for regulatory frameworks are critical areas of concern. As AI technology progresses, it is essential to address these challenges to ensure that AI serves humanity responsibly and equitably.

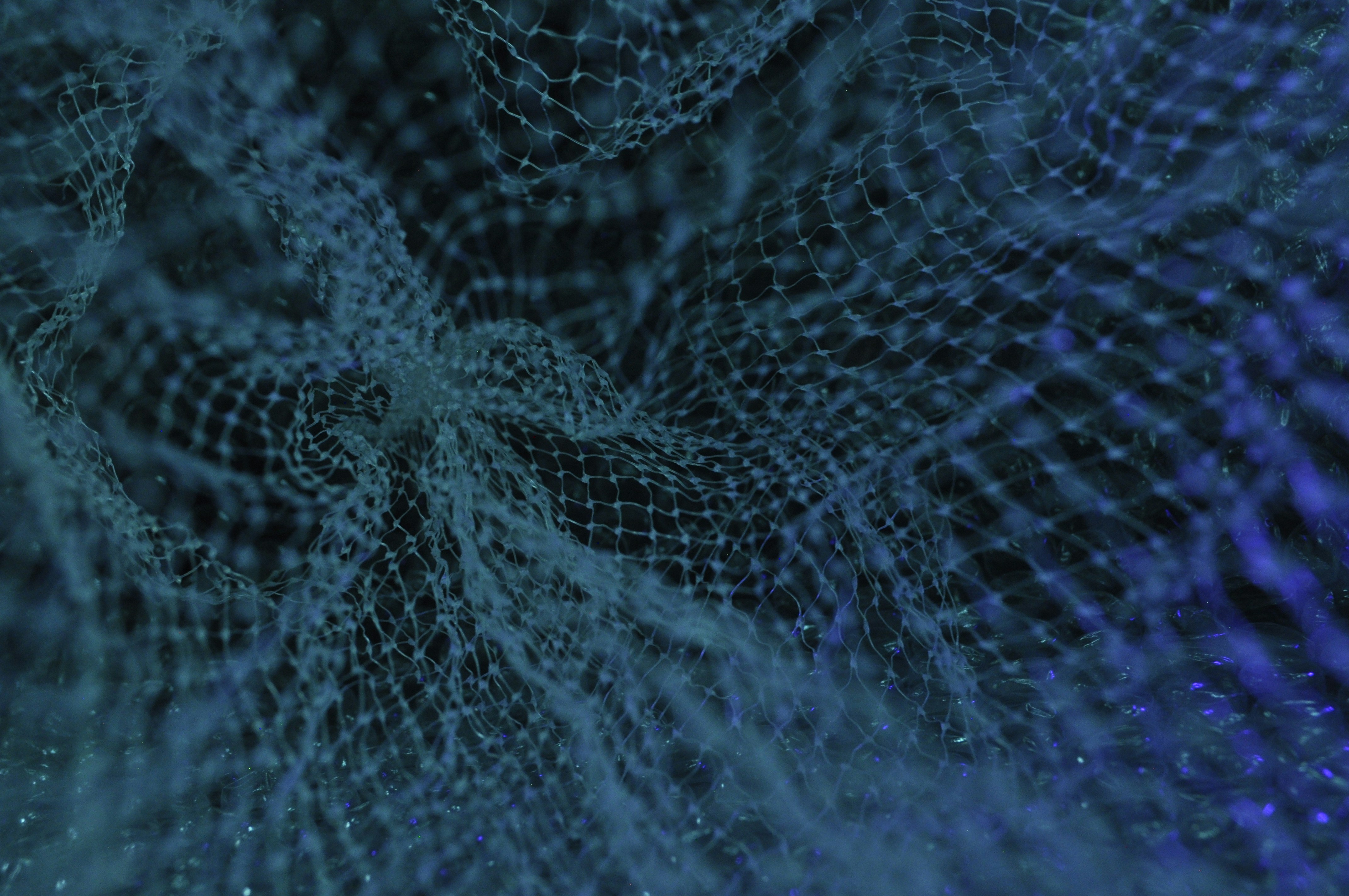

Exploring Neural Networks

Neural networks, a cornerstone of artificial intelligence, are computational models inspired by the architecture and functioning of the human brain. These networks consist of interconnected nodes, or "neurons," organized into layers. Each neuron computes a weighted sum of its inputs plus a bias, applies a nonlinear activation function, and passes the result to the next layer; stacking these layers lets the network learn and make decisions. A typical network has an input layer, one or more hidden layers, and an output layer, linked by weighted connections that are adjusted during the learning process.
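To make the neuron-and-layer description concrete, here is a minimal sketch of a forward pass in plain Python. It assumes a sigmoid activation and a tiny network with hand-picked weights; the function names `neuron`, `layer`, and `forward` are hypothetical illustrations, not part of any library.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(x, network):
    """Feed x through each (weights, biases) layer in turn: input -> hidden -> output."""
    activations = x
    for weight_rows, biases in network:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical network: 2 inputs, 3 hidden neurons, 1 output neuron.
network = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]

print(forward([1.0, 2.0], network))  # a single value between 0 and 1
```

In a real network the weights would not be hand-picked; they are the values that training adjusts.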

Several types of neural networks exist, each suited to particular tasks. Feedforward neural networks are the simplest form: data flows in one direction, from input to output. These networks are effective for tasks such as regression and classification. Convolutional neural networks (CNNs), by contrast, are designed primarily for image and video processing. Their convolutional layers slide small learned filters across the input to detect spatial hierarchies of features, making them well suited to image recognition and object detection. Recurrent neural networks (RNNs) process sequential data by maintaining a hidden state, a form of memory that carries information from earlier steps to later ones. This makes them suitable for applications such as natural language processing and time-series forecasting.
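The two core operations that distinguish CNNs and RNNs can each be sketched in a few lines. The version below is a simplified illustration under stated assumptions: a 1-D "valid" convolution (computed as cross-correlation, as most deep-learning libraries do) and a scalar RNN with a `tanh` activation. The names `conv1d` and `rnn` and the parameters `w_x`, `w_h`, `b` are hypothetical.

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1-D convolution: slide the kernel along the signal and take a dot
    product at each position (cross-correlation, as in most DL libraries)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn(sequence, w_x, w_h, b):
    """Minimal scalar RNN. The hidden state h carries information forward:
    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# A [-1, 1] kernel acts as an edge detector: it responds where neighbouring values differ.
print(conv1d([0, 0, 1, 1, 1, 0], [-1, 1]))  # → [0, 1, 0, 0, -1]

# The input is 0 at steps 2 and 3, yet the hidden state stays non-zero: that is the "memory".
print(rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

A real CNN learns many 2-D filters rather than using a fixed one, and a real RNN uses weight matrices over vectors, but the sliding dot product and the recurrent state update are the same ideas.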

Practical applications of neural networks are vast and transformative. Image recognition systems powered by CNNs are used in areas ranging from medical diagnostics to automated photo tagging on social media platforms. Natural language processing (NLP), which has often relied on RNNs, is integral to virtual assistants, translation services, and sentiment analysis tools. Autonomous vehicles combine several types of neural networks to interpret sensor data, make real-time decisions, and navigate complex environments safely.

The training process is crucial to a neural network's effectiveness. It involves feeding the network large datasets and iteratively adjusting its weights to minimize a loss function that measures prediction error. Two key concepts are backpropagation, which applies the chain rule to compute the gradient of the loss with respect to every weight, and gradient descent, an optimization technique that moves each weight a small step in the direction opposite to its gradient. Overfitting, where a model learns the training data (including its noise) too closely and performs poorly on new data, is a common challenge that can be mitigated with techniques such as dropout and regularization, for example an L2 penalty on the weights.
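The training loop described above can be sketched for the smallest possible case: a single sigmoid neuron trained with batch gradient descent on binary cross-entropy, plus an L2 penalty. With one neuron, backpropagation reduces to a single application of the chain rule (for sigmoid + cross-entropy, dLoss/dz = prediction − target). The toy AND dataset, the learning rate, the penalty strength, and the names `train_logistic` and `dropout` are all assumptions chosen for illustration; a multi-layer network repeats the same backward step layer by layer.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_logistic(data, lr=0.5, l2=0.01, epochs=2000):
    """Batch gradient descent on cross-entropy loss + L2 penalty for one sigmoid neuron."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in data:
            err = predict(w, b, x) - y          # backward pass: dLoss/dz for this example
            for i in range(n):
                grad_w[i] += err * x[i]          # chain rule: dz/dw_i = x_i
            grad_b += err
        m = len(data)
        # Average the gradients, add the L2 term (l2 * w), and step against the gradient.
        w = [wi - lr * (gw / m + l2 * wi) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b

def dropout(activations, p, rng):
    """Inverted dropout (training only): zero each activation with probability p and
    scale the survivors by 1/(1-p) so the expected activation is unchanged."""
    return [0.0 if rng.random() < p else a / (1.0 - p) for a in activations]

# Hypothetical toy dataset: logical AND of two binary inputs.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_logistic(data)
for x, y in data:
    print(x, y, round(predict(w, b, x), 3))

print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=random.Random(0)))
```

The L2 term keeps the weights from growing without bound, which is one simple way regularization discourages overfitting; dropout is shown separately because it applies to hidden-layer activations, which this one-neuron model does not have.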
