Exploring Artificial Intelligence and Neural Networks: A Comprehensive Guide

June 14, 2024 | by zolindar@gmail.com

Understanding Artificial Intelligence: Origins, Types, and Applications

Artificial Intelligence (AI) has its roots in the mid-20th century, with early research efforts aimed at simulating human intelligence through computational means. The field has evolved significantly since its inception, marked by milestones such as the Turing Test proposed by Alan Turing in 1950 and the development of the first AI programs in the 1950s and 1960s. The progression of AI research has led to the categorization of AI into three primary types: narrow AI, general AI, and superintelligent AI.

Narrow AI, also known as weak AI, is designed to perform specific tasks. Examples of narrow AI include virtual assistants like Siri and Alexa, recommendation algorithms used by streaming services, and autonomous vehicles. These systems are efficient at handling particular functions but lack the ability to generalize knowledge across different domains.

General AI, or strong AI, aims to match human cognitive abilities, enabling machines to understand, learn, and apply knowledge in a manner akin to human reasoning. While general AI remains largely theoretical, research endeavors continue to push the boundaries of machine learning and cognitive computing to achieve this sophisticated level of intelligence.

Superintelligent AI refers to a hypothetical form of artificial intelligence that would surpass human intelligence in every respect, including creativity, problem-solving, and emotional intelligence. It remains a subject of extensive debate and speculation, with potential implications that could fundamentally reshape society.

AI applications now appear across many industries. In healthcare, AI-driven diagnostics and personalized treatment plans are changing how patient care is delivered. The finance sector uses AI for fraud detection, risk management, and algorithmic trading. The automotive industry is being transformed by the development of self-driving cars, while entertainment platforms use AI for content creation and user engagement.

Technologies such as machine learning, deep learning, and natural language processing are pivotal in these advancements. Machine learning enables systems to learn from data, deep learning involves neural networks with layered architectures for complex pattern recognition, and natural language processing allows machines to understand and generate human language.

However, the integration of AI into daily life raises ethical considerations and societal impacts. Issues such as data privacy, job displacement, and the potential for biased decision-making necessitate careful governance and ethical guidelines. As AI continues to evolve, it is imperative to address these challenges to ensure that AI technologies are developed and deployed responsibly.

Neural Networks: The Backbone of Modern AI

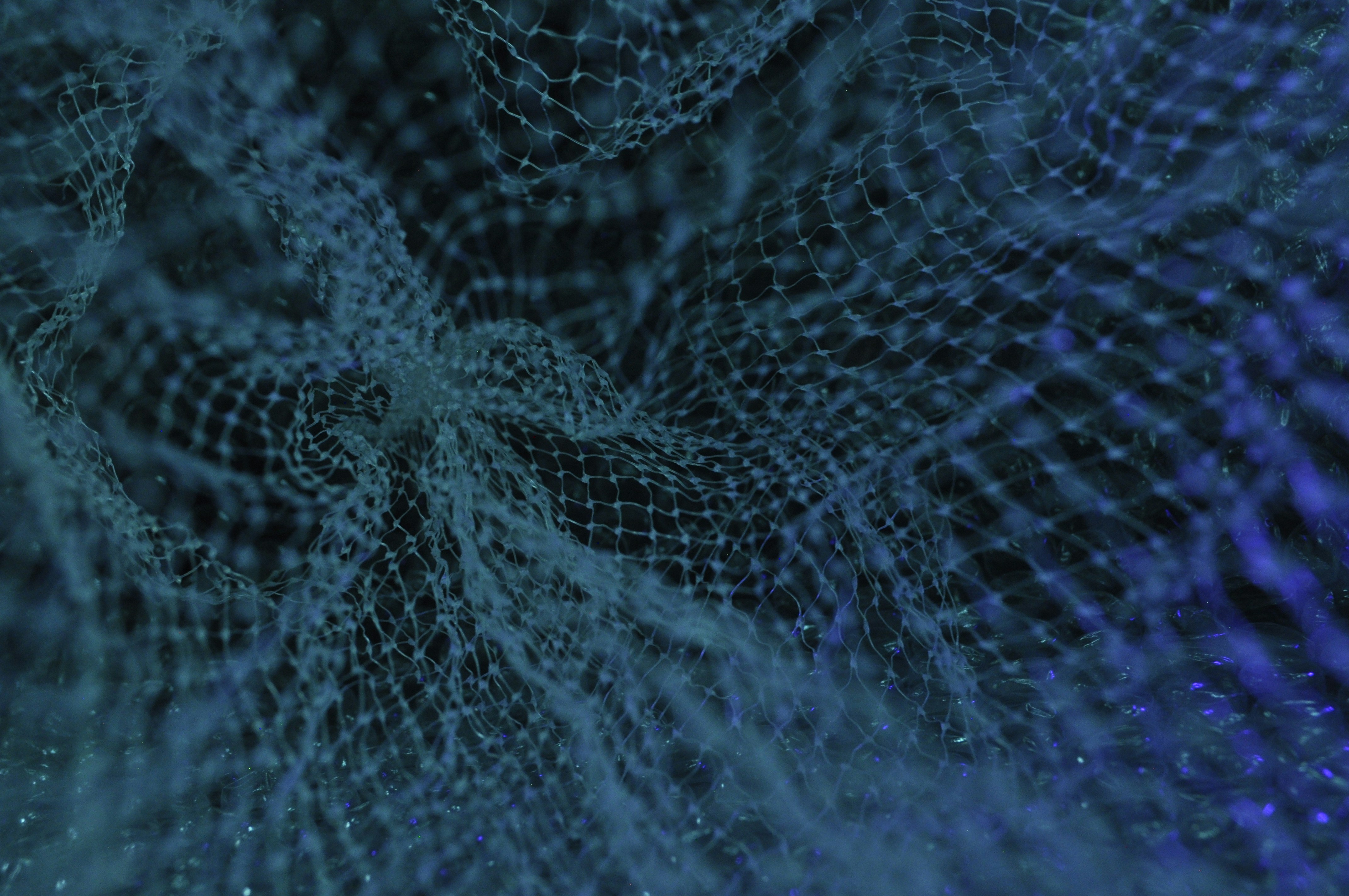

Neural networks form the cornerstone of contemporary artificial intelligence and are loosely modeled on the web of neurons in the human brain. A neural network is a computational model composed of interconnected units, or nodes, arranged in layers. Each node computes a weighted sum of its inputs, applies an activation function, and passes the result to the next layer until the final layer produces an output. These networks are designed to recognize patterns and make decisions based on data, which underpins many AI-driven applications.
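To make the idea of nodes passing information across layers more concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the weight values, and the choice of a sigmoid activation are illustrative assumptions, not something prescribed by this guide.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Each node takes a weighted sum of the inputs, adds its bias,
    and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each layer in order and return the output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
# The weights are made up for illustration; a real network learns them.
example_layers = [
    ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                   # output layer
]
output = forward([1.0, 0.0], example_layers)
```

The single value in `output` lies between 0 and 1, so it could be read as a score for a yes/no decision.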

Different types of neural networks serve different purposes, each with its own structure. Feedforward neural networks are the simplest type: data moves in one direction, from input to output, without looping back. They are commonly used for straightforward classification tasks. Convolutional neural networks (CNNs) are especially effective in image and video recognition. Their convolutional layers slide small learned filters over the input to detect features in visual data, which makes them central to computer vision. Recurrent neural networks (RNNs), on the other hand, are designed for sequential data such as time series and natural language. They maintain a ‘memory’ of previous inputs in a hidden state, which helps them predict the next values in a sequence.
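The two ideas that set CNNs and RNNs apart, sliding a filter over the input and carrying a hidden state between steps, can be sketched in a few lines of plain Python. The function names, the one-dimensional signal, and the fixed weights below are hypothetical choices made for illustration; real networks learn these values and usually work on multi-dimensional data.

```python
import math

def conv1d(signal, kernel):
    """Slide the kernel over the signal and take a weighted sum at each
    position (no padding; this is the cross-correlation form that deep
    learning libraries typically call convolution)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def rnn_final_state(sequence, w_x=1.0, w_h=0.5, bias=0.0):
    """Run a one-unit recurrent cell over the sequence. The hidden state
    is fed back in at every step, so earlier inputs influence later ones."""
    hidden = 0.0
    for x in sequence:
        hidden = math.tanh(w_x * x + w_h * hidden + bias)
    return hidden

# A filter of [-1, 1] responds where the signal changes.
edges = conv1d([0, 0, 1, 1, 0], [-1, 1])  # [0, 1, 0, -1]

# Both sequences end with the same inputs, but the first one started
# with a 1, and the hidden state still carries a trace of it.
with_early_input = rnn_final_state([1, 0, 0])
without_input = rnn_final_state([0, 0, 0])
```

Here `edges` is nonzero only where the signal rises or falls, and `with_early_input` stays above zero while `without_input` is exactly zero, which is the ‘memory’ effect in miniature.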

Training a neural network relies on a few core concepts. Backpropagation is the algorithm that computes how much each weight contributed to the output error, by propagating that error backward through the layers. Gradient descent is the optimization technique that then uses these gradients to update the weights iteratively, moving step by step toward a lower error. Training can also lead to overfitting, where the model performs well on training data but poorly on new, unseen data. Techniques such as dropout and regularization are used to mitigate this.
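As a rough illustration of gradient descent, the sketch below trains a single linear neuron on four made-up data points. It is the simplest possible case: with only one layer, the gradient comes from a single application of the chain rule, whereas backpropagation repeats that step layer by layer in deeper networks. The function name, the data, the learning rate, and the optional L2 penalty (`l2`, one common form of regularization) are all assumptions chosen for the example.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, steps=2000, l2=0.0):
    """Fit y ~ w * x + b by gradient descent on the mean squared error.
    An optional L2 penalty (l2 * w**2) discourages large weights."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y          # prediction minus target
            grad_w += 2.0 * error * x / n    # d(loss)/dw via the chain rule
            grad_b += 2.0 * error / n        # d(loss)/db
        grad_w += 2.0 * l2 * w               # gradient of the L2 penalty
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Made-up data that follows y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = train_linear_neuron(xs, ys)                  # close to w = 2, b = 1
w_reg, b_reg = train_linear_neuron(xs, ys, l2=0.1)  # penalty shrinks w
```

Without the penalty, the weights settle near the values that generated the data. With it, the learned weight is pulled toward zero, which is the trade-off regularization makes to reduce overfitting on noisier, real-world data.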

Neural networks have demonstrated remarkable capabilities in real-world applications. In image and speech recognition, these networks can identify objects and transcribe spoken words with high accuracy. Natural language processing benefits from neural networks’ ability to understand and generate human language, enhancing chatbots and translation services. Autonomous systems, including self-driving cars, rely on neural networks to interpret sensor data and make real-time decisions.

Despite these advancements, challenges persist in neural network research. The need for vast amounts of data and computational power, along with the limited interpretability of trained models, remains a significant hurdle. Future developments may focus on more efficient training methods, better model transparency, and neural networks that require less data, pushing the boundaries of what AI can achieve.
